How much water can flow into the Netherlands via the large rivers?

How much water can flow to the Netherlands per unit time via a large river like the Rhine in the most extreme situations? The answer to that question is becoming more important, because the climate is changing and safety standards are becoming stricter. A new method for determining extremely high discharges takes into account the behavior of the river upstream of the Dutch border near Lobith.

In the Netherlands, protection against river flooding depends not only on the strength of the flood defenses but also, strongly, on the discharges of the Rhine and the Maas at the border, near Lobith and Eijsden respectively. The key question has always been: how much water can reach the border in extreme situations?

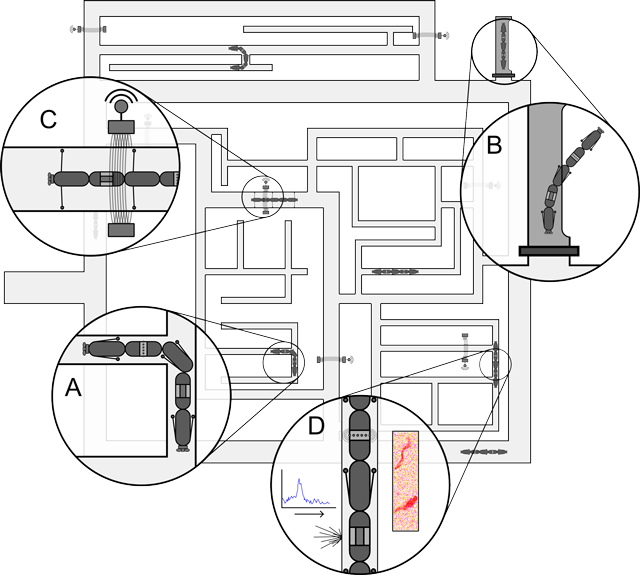

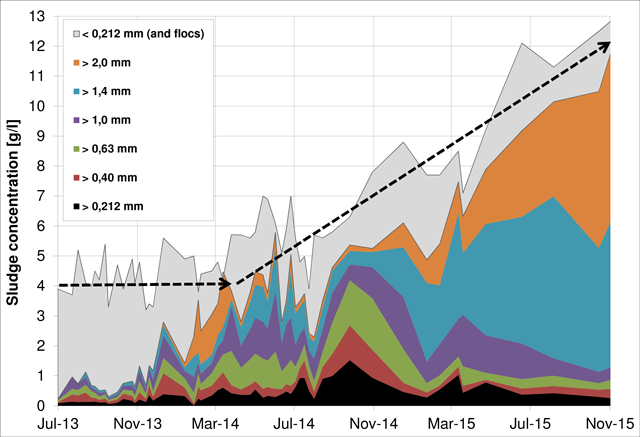

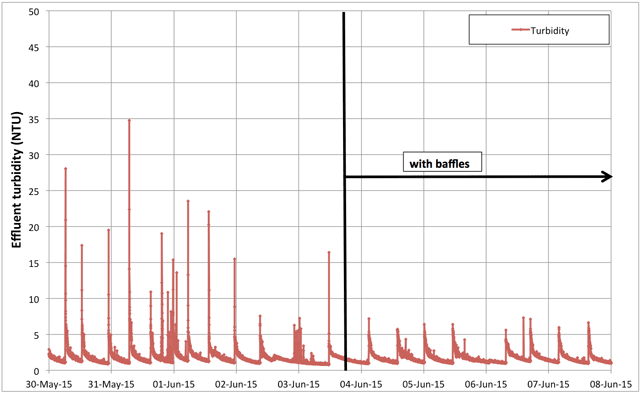

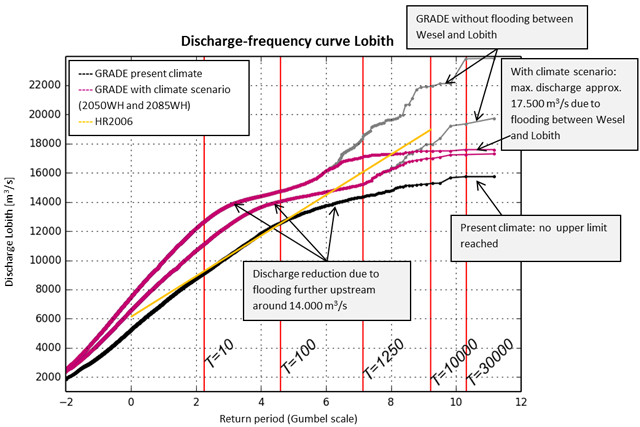

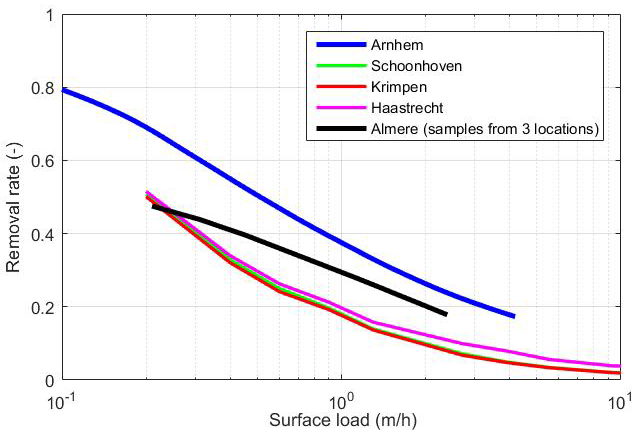

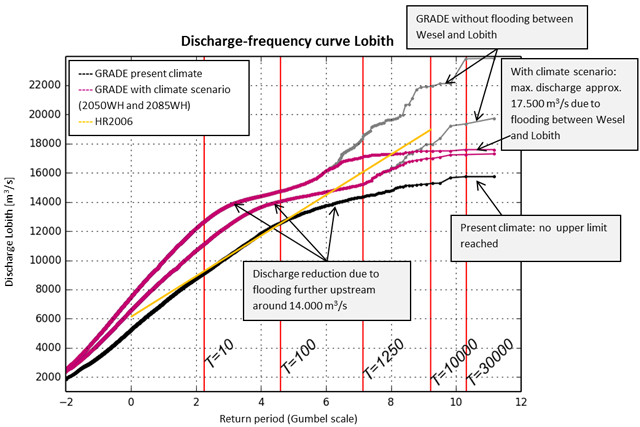

The discharge is directly linked to its probability of occurrence: average discharges are common, but extremely high and extremely low discharges are rare. The occurrence of extremely high discharges is modeled with a probability distribution of the annual peak discharges, sometimes also called the ‘working line’ (see Figure 1).
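To make the idea of a working line concrete, here is a minimal sketch of fitting a distribution to annual peaks and reading off the discharge for a given return period. The article does not say which distribution underlies HR2006; the Gumbel distribution and the method-of-moments fit are assumptions chosen for simplicity, and the peak values are hypothetical, not Lobith measurements.

```python
import math
import statistics

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant

def fit_gumbel_moments(annual_peaks):
    """Method-of-moments fit of a Gumbel distribution to annual peak discharges.

    Returns (mu, beta): location and scale, in the units of the input (m3/s).
    """
    mean = statistics.mean(annual_peaks)
    std = statistics.stdev(annual_peaks)  # sample standard deviation
    beta = std * math.sqrt(6) / math.pi
    mu = mean - EULER_GAMMA * beta
    return mu, beta

def gumbel_quantile(mu, beta, return_period):
    """Discharge exceeded on average once every `return_period` years,
    i.e. with annual exceedance probability 1 / return_period."""
    non_exceedance = 1.0 - 1.0 / return_period
    return mu - beta * math.log(-math.log(non_exceedance))

# Hypothetical annual maxima in m3/s, for illustration only (not measured Lobith data).
peaks = [6200, 7400, 8100, 5900, 9300, 10800, 7700, 6800, 11900, 8600]
mu, beta = fit_gumbel_moments(peaks)
for T in (100, 1_250, 100_000):
    print(f"once in {T:>7,} years: Q = {gumbel_quantile(mu, beta, T):,.0f} m3/s")
```

Reading off once in 1,250 or once in 100,000 years from a short record depends entirely on the assumed shape of the distribution's tail, which is exactly the weakness of pure statistical extrapolation discussed later in the article.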

Because absolute safety cannot be guaranteed, the Netherlands currently works with a peak discharge whose probability of occurrence is considered acceptable: the so-called design discharge. A physical-mathematical river model translates this discharge into water levels along the rivers, the design water levels, on which the dikes are then designed.

New insights regularly lead to discussions about the magnitude of the design discharge and about whether there is an upper limit to how much water can flow to the Netherlands via the Rhine. What happens to the embankments in Germany plays an important role in this.

Figure 1 - Various discharge working lines at Lobith. HR2006 is the working line based on statistical extrapolation, which has been used until now to determine the design discharge at Lobith.

Along the part of the Rhine just upstream of Lobith there are areas with an almost unlimited storage capacity. In this stretch, the embankments will be overtopped at extremely high water levels, or may even breach. If those embankments are raised, the discharge capacity of the river will increase. Germany plans to raise them, with the work scheduled for completion around 2025. The height of these embankments will then determine the upper limit of the discharge on which the design of the flood defences in the Netherlands is based.

Changes

Meanwhile, the Netherlands has decided to adopt a different approach to flood protection. Every embankment section is assigned a maximum allowable probability of flooding, based on an economic assessment of the potential damage and the investment costs needed to prevent it, the probability of loss of life due to flooding, the probability of large numbers of victims in a single flood event, and the failure of vital infrastructure.

These standards are set out in the revision of the Water Act, which takes effect on 1 January 2017. For many river dikes, this leads to stricter standards (a lower allowable probability of flooding). The new standards also differ more between embankment sections: along the branches of the Rhine, the new flooding probability standard ranges from once in 300 years on average to once in 100,000 years on average.

In addition, the new design tools focus more explicitly on geotechnical failure mechanisms, such as piping or instability, that can lead to a flood.

The new approach has consequences for the information we require on extreme high discharges.

Firstly, there are now multiple factors (failure mechanisms) that can lead to failure, each with its own probability of failure. The sum of those failure probabilities must not exceed the flooding probability standard of the embankment section, so each partial probability must be smaller than the standard. For each partial probability, we must therefore look at more extreme events than we currently do.
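The budgeting rule in this first point can be sketched as follows. The mechanism names, the split and the standard of once in 10,000 years are hypothetical choices for illustration; the sketch only shows that when the partial probabilities add up to the standard, each of them is smaller than the standard, so each mechanism must be assessed against rarer events.

```python
def allocate_failure_budget(standard, fractions):
    """Split an annual flooding-probability standard over failure mechanisms.

    `fractions` maps mechanism -> share of the budget. The shares may not add up
    to more than 1, so the partial probabilities sum to at most the standard.
    """
    if sum(fractions.values()) > 1.0 + 1e-9:
        raise ValueError("budget shares add up to more than 1")
    return {mechanism: standard * share for mechanism, share in fractions.items()}

# Hypothetical standard and split, for illustration only.
standard = 1 / 10_000  # once in 10,000 years on average
shares = {"overflow": 0.4, "piping": 0.3, "macro-instability": 0.2, "other": 0.1}
budget = allocate_failure_budget(standard, shares)
for mechanism, p in budget.items():
    # Each mechanism gets a longer return period than the section standard itself.
    print(f"{mechanism:<18} once in {1 / p:,.0f} years")
```

With these assumed shares, overflow alone must be designed for once in 25,000 years rather than once in 10,000 years.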

In addition, the new standards mostly correspond to much lower failure probabilities per embankment section than the current probability of exceedance of the water level (once in 1,250 years). We therefore have to look at much rarer events, sometimes with a probability as low as once in 100,000 years on average.

Secondly, the so-called 'length effect' also plays a role: mainly because of geotechnical uncertainties, a longer stretch of otherwise identical embankment has a greater probability of failure than a shorter one. After all, a longer embankment section is more likely to contain a relatively weak spot.
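The weakest-spot argument behind the length effect can be checked with a small Monte Carlo sketch. The assumptions are ours, not the article's: the embankment is split into segments of equal length, each segment's strength is an independent normal draw in arbitrary units, and the load is fixed. Real analyses account for spatial correlation between segments, which weakens the effect.

```python
import random

def weakest_spot_failure_prob(n_segments, load, mean_strength, sd_strength,
                              trials=20_000, seed=1):
    """Monte Carlo estimate of the failure probability of a stretch of
    `n_segments` segments: the stretch fails if its weakest segment has a
    strength below the load. Segment strengths are independent normal draws."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        weakest = min(rng.gauss(mean_strength, sd_strength) for _ in range(n_segments))
        if weakest < load:
            failures += 1
    return failures / trials

# Hypothetical parameters in arbitrary units: same load, same segment properties.
for n in (1, 5, 10, 20):
    p = weakest_spot_failure_prob(n, load=0.0, mean_strength=2.0, sd_strength=1.0)
    print(f"{n:>2} segments: P(failure) ~ {p:.3f}")
```

Every segment has the same properties, yet the estimated failure probability grows with the number of segments, simply because a longer stretch offers more chances for a weak spot.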

Thirdly, the duration of the discharge wave is of interest for some of those geotechnical failure mechanisms. For example, a rapidly declining water level can lead to sliding of the outer slope of the embankment.
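Assessing this requires the whole wave, not just its peak. As a minimal sketch, the rate of fall can be derived from a series of water levels; the levels below are hypothetical, and the article does not state which rate of fall is critical for sliding, as that depends on the embankment.

```python
def max_fall_rate(levels, dt_hours, window_hours=24.0):
    """Largest drop in water level over any window of about `window_hours`,
    expressed in metres per day. `levels` are equally spaced, `dt_hours` apart."""
    steps = round(window_hours / dt_hours)
    if steps < 1 or steps >= len(levels):
        raise ValueError("window must cover at least one step and fit in the series")
    largest_drop = max(levels[i] - levels[i + steps] for i in range(len(levels) - steps))
    # Normalize by the window actually used (steps * dt_hours), then convert to per day.
    return max(largest_drop, 0.0) / (steps * dt_hours) * 24.0

# Hypothetical daily water levels (m) around a flood peak, for illustration only.
levels = [14.0, 14.6, 15.3, 15.8, 15.9, 15.4, 14.6, 13.9]
print(f"max fall over 1 day:  {max_fall_rate(levels, 24):.2f} m/day")
print(f"max fall over 2 days: {max_fall_rate(levels, 24, 48):.2f} m/day")
```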

In short, as far as river discharges at the border are concerned, we need more information, about more extreme discharge events. The current practice of statistically extrapolating peak discharges measured over approximately 100 years is no longer adequate.

GRADE

The Directorate General for Public Works and Water Management, together with Deltares and KNMI, has developed a new system for modeling extreme high discharges: GRADE (Generator of Rainfall and Discharge Extremes). It is based on long-term time series of temperature and precipitation, derived from meteorological data. Each of these series is fed into a hydrological model (HBV), which calculates the inflow into the river. That inflow then serves as input for a hydrodynamic model (SOBEK), which calculates the propagation of the discharge wave through the river and, eventually, the discharge entering the Netherlands at Lobith.

Repeating this calculation many times and selecting the most extreme events from the results gives a picture of the most extreme discharge waves: both their peak discharges and their development over time. The main advantage is that flooding in Germany or Belgium can be included in the model, as can emergency measures such as laying sandbags.
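The "repeat many times and select the extremes" step can be sketched as ranking simulated annual maxima and attaching empirical return periods. This is not GRADE itself: the hypothetical function `stand_in_annual_peak` replaces the whole weather-generator, HBV and SOBEK chain with a lognormal draw with invented parameters, and the Weibull plotting position and the 50,000-year record length are our own choices.

```python
import math
import random

def stand_in_annual_peak(rng):
    """Hypothetical stand-in for one simulated year of the model chain
    (weather series -> HBV -> SOBEK). Here just a lognormal draw (m3/s)."""
    return rng.lognormvariate(math.log(8_000), 0.25)

def empirical_return_periods(annual_peaks):
    """Rank annual maxima from largest to smallest and attach the Weibull
    plotting-position return period T = (n + 1) / rank."""
    ranked = sorted(annual_peaks, reverse=True)
    n = len(ranked)
    return [((n + 1) / rank, q) for rank, q in enumerate(ranked, start=1)]

def discharge_for_return_period(table, return_period):
    """Smallest simulated peak whose empirical return period is at least `return_period`."""
    candidates = [q for t, q in table if t >= return_period]
    if not candidates:
        raise ValueError("simulated record too short for this return period")
    return min(candidates)

rng = random.Random(42)
peaks = [stand_in_annual_peak(rng) for _ in range(50_000)]  # 50,000 simulated years
table = empirical_return_periods(peaks)
for T in (1_250, 10_000):
    print(f"once in {T:,} years: about {discharge_for_return_period(table, T):,.0f} m3/s")
```

Because the extremes come from simulated events rather than from an assumed distribution, effects such as flooding upstream show up directly in the results. The price is record length: with 50,000 simulated years, a once-in-100,000-years value cannot be read off empirically and `discharge_for_return_period` raises an error.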

In principle, GRADE gives us a method to answer the question of whether the discharge at Lobith has an upper limit, since GRADE includes flooding in Germany. However, SOBEK is a one-dimensional model: the entire cross section of the river is compressed into a single line, with 'containers' for water storage or flooding linked to it. The model does take into account that flooded areas along large parts of the Rhine can become 'full', after which they no longer reduce the water level downstream. In addition, there are schematization effects, for example in how flooding just upstream of the Dutch border, between Wesel and Lobith, is represented.

Additional analyses (a recommendation by the Expertise Network on Flood Protection (ENW): 'Does the Rhine have a maximum discharge at Lobith?') show that flooding between Wesel and Lobith is still strongly underestimated in GRADE, so the calculated extreme discharges are overestimated. Two-dimensional models that should solve this problem are still under development.

Estimating the upper limit

To arrive at a reliable estimate in the meantime, all SOBEK profiles schematized in GRADE were inspected once more on the basis of digital elevation maps. This showed that the greatest sensitivity lies in the flooding between Wesel and Lobith.

A more accurate treatment of the flow over the embankments there, based on the embankment heights planned for 2025, led to the conclusion that the discharge at Lobith will most likely not exceed 17,500 cubic meters per second, even under very extreme conditions (the current design discharge is 16,000 cubic meters per second).

If the embankments between Wesel and the border were infinitely high and strong, the peak discharge at Lobith could rise to 23,000 cubic meters per second, assuming the KNMI'14 climate scenario WH for 2085. The last stretch of embankments before the border therefore acts as a kind of safety valve for the Netherlands.

However, the water that flows over the German embankments can cause a great deal of damage and misery, possibly even fatalities. It can also reach the Netherlands 'through the back door': flood calculations show that water flowing through the Achterhoek can even reach Zwolle. Nor does truncating the extreme discharge waves mean that the river embankments, even if they are high enough, face no further problems. A truncated wave has a longer-lasting peak, which increases the probability that the embankments are weakened.

Some German embankments, for example between Bonn and Düsseldorf, can already be overtopped at 14,000 cubic meters per second. These areas have a limited volume, however, so during extreme discharges they are already full before the peak arrives and their truncating effect is lost. The leveling-off of the discharges around 14,000 cubic meters per second, followed by a renewed rise, is visible in the GRADE results (Figure 1) and was also found in calculations with a two-dimensional flooding model. These areas therefore do not provide a real upper limit to the discharge at Lobith.
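The difference between storage areas that fill up and the near-unlimited area between Wesel and Lobith can be illustrated with a toy 'container' model. This is a sketch under strong assumptions, not GRADE or SOBEK: there are no hydraulics, excess discharge is diverted instantly, and the discharge wave and the 200 million m³ storage volume are hypothetical. Only the thresholds of 14,000 and 17,500 cubic meters per second come from the article.

```python
import math

DAY = 86_400  # seconds per time step (daily steps)

def route_past_floodplain(inflow, threshold, storage_volume, dt=DAY):
    """Discharge passing a floodplain that takes in water once the river discharge
    exceeds `threshold` (m3/s) and can hold at most `storage_volume` (m3).
    Once full it no longer reduces the discharge; math.inf models an area
    with practically unlimited storage."""
    stored = 0.0
    outflow = []
    for q in inflow:
        excess = max(q - threshold, 0.0)
        room = max(storage_volume - stored, 0.0)
        diverted = min(excess, room / dt)  # m3/s that still fits in this step
        stored += diverted * dt
        outflow.append(q - diverted)
    return outflow

def days_near_peak(series, tolerance=0.01):
    """Number of steps within `tolerance` (a fraction) of the series maximum."""
    peak = max(series)
    return sum(1 for q in series if q >= (1 - tolerance) * peak)

# Hypothetical daily discharge wave (m3/s) at the upstream end, for illustration only.
wave = [8_000, 10_000, 12_000, 14_000, 16_000, 18_000, 20_000,
        18_000, 16_000, 14_000, 12_000, 10_000, 8_000]
limited = route_past_floodplain(wave, 14_000, 2.0e8)       # small area: fills up early
unlimited = route_past_floodplain(wave, 17_500, math.inf)  # near-unlimited area
for name, series in (("no flooding", wave), ("limited", limited), ("unlimited", unlimited)):
    print(f"{name:<12} peak {max(series):>8,.0f} m3/s, {days_near_peak(series)} day(s) near peak")
```

In the limited case the area is full before the peak arrives, so the peak passes almost unchanged. In the unlimited case the peak is cut off at 17,500 cubic meters per second, but the flattened top lasts three days instead of one: the longer load on the embankments mentioned above.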

Conclusion

Flooding in Germany limits the discharge reaching the Netherlands. From a discharge of around 14,000 cubic meters per second, this flooding reduces the discharge, but at higher discharges the flooded areas fill up and the positive effect is lost. At discharges of approximately 17,500 cubic meters per second, however, areas just upstream of the Dutch border (between Wesel and Lobith) are also flooded, and their storage capacity is hardly limited.

This creates an upper limit to the discharge at Lobith. The truncation of the extreme discharges at Lobith is therefore good news for flood protection in the Netherlands, but it is no reason to celebrate yet. The GRADE approach, with flooding included, provides a realistic estimate of the discharges at Lobith and allows for significantly lower dikes than the statistical extrapolation does.

This article is based on GRADE research by the Department of Public Works and Water Management, Deltares and KNMI, and on a recent recommendation of the Expertise Network on Flood Protection: ‘Heeft de Rijnafvoer bij Lobith een maximum?’ (Does the Rhine have a maximum discharge at Lobith?), August 2016 (www.enwinfo.nl).

Matthijs Kok

(Expertise Network on Flood Protection)

Joost Pol

(HKV)

Huib de Vriend

(Expertise Network on Flood Protection)

Summary

The 'design discharge' plays an important role in flood protection along the rivers. Until now, this discharge has been based on statistical extrapolation of measured discharges at Lobith. The disadvantage of this method is that it does not consider how the river upstream of Lobith behaves during discharges that have never been observed.

The Department of Public Works and Water Management has developed a new model system for modeling extreme high discharges in rivers: GRADE (Generator of Rainfall and Discharge Extremes). The GRADE approach, which includes flooding in Germany, provides a more realistic estimate of the extreme discharges at Lobith and allows for significantly lower flood defenses than the statistical extrapolation does.
